UK Publishes Guidance on the Use of AI by Judges

Artificial Intelligence (AI) Guidance for Judicial Office Holders

人工智能(AI)司法官员指南

12 December 2023

2023年12月12日

Introduction

引言

This guidance has been developed to assist judicial office holders in relation to the use of Artificial Intelligence (AI).

本指南旨在帮助司法官员使用人工智能(AI)。

It sets out key risks and issues associated with using AI and some suggestions for minimising them. Examples of potential uses are also included.

本文阐明使用AI相关的主要风险和问题,并提供了一些最小化这些风险的建议。同时也包括了潜在用途的示例。

Any use of AI by or on behalf of the judiciary must be consistent with the judiciary’s overarching obligation to protect the integrity of the administration of justice.

司法部门或代表司法部门使用AI必须与司法的总体义务一致,即保护司法行政的完整性。

This guidance applies to all judicial office holders under the responsibility of the Lady Chief Justice and the Senior President of Tribunals, their clerks and other support staff.

本指南适用于女首席法官和法庭高级庭长管辖下的所有司法官员、书记员和其他支持人员。

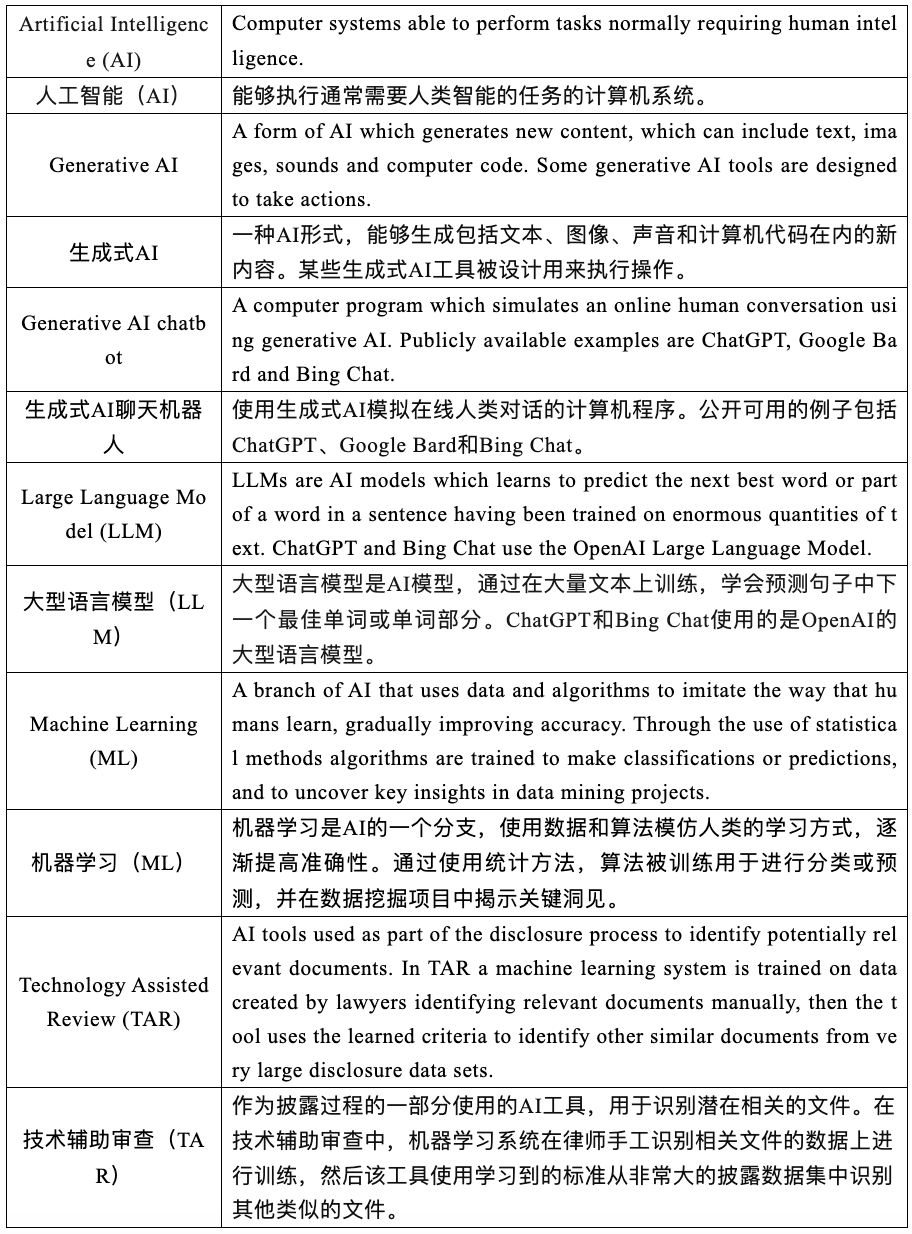

Common Terms

常用术语

Guidance for responsible use of AI in Courts and Tribunals

法院负责任地使用AI的指导

1) Understand AI and its applications

1)了解AI及其应用

Before using any AI tools, ensure you have a basic understanding of their capabilities and potential limitations.

在使用任何AI工具之前,确保您对它们的功能和潜在局限有基本了解。

Some key limitations:

以下是一些关键局限:

·Public AI chatbots do not provide answers from authoritative databases. They generate new text using an algorithm based on the prompts they receive and the data they have been trained upon. This means the output which AI chatbots generate is what the model predicts to be the most likely combination of words (based on the documents and data that it holds as source information). It is not necessarily the most accurate answer.

·面向公众的AI聊天机器人不提供来自权威数据库的答案。它们使用基于收到的提示和它们基于数据训练的算法生成新文本。这意味着AI聊天机器人生成的输出是模型预测的最可能的词组合(基于它作为源信息持有的文件和数据)。这并不一定是最准确的答案。

·As with any other information available on the internet in general, AI tools may be useful to find material you would recognise as correct but have not got to hand, but are a poor way of conducting research to find new information you cannot verify. They may be best seen as a way of obtaining non-definitive confirmation of something, rather than providing immediately correct facts.

·与互联网上的任何其他信息一样,一般来说,AI工具可能有助于找到你认为正确但手头没有的材料,但却不是寻找无法验证的新信息的好方法。它们最好被视为获取某事的非决定性确认的方式,而不是提供立即正确的事实。

·The quality of any answers you receive will depend on how you engage with the relevant AI tool, including the nature of the prompts you enter. Even with the best prompts, the information provided may be inaccurate, incomplete, misleading, or biased.

·您收到的任何答案的质量将取决于您如何使用相关的AI工具,包括您输入的提示的性质。即使是最好的提示,提供的信息也可能是不准确的、不完整的、误导的或有偏见的。

·The currently available LLMs appear to have been trained on material published on the internet. Their “view” of the law is often based heavily on US law although some do purport to be able to distinguish between that and English law.

·目前可用的LLMs似乎是在互联网上发布的材料上进行训练的。它们对法律的“看法”通常基于美国法律,尽管有些LLMs声称能够区分美国法律和英国法律。

2) Uphold confidentiality and privacy

2)维护保密性和隐私

Do not enter any information into a public AI chatbot that is not already in the public domain. Do not enter information which is private or confidential. Any information that you input into a public AI chatbot should be seen as being published to all the world.

The current publicly available AI chatbots remember every question that you ask them, as well as any other information you put into them. That information is then available to be used to respond to queries from other users. As a result, anything you type into it could become publicly known.

不要向面向公众的AI聊天机器人输入任何非公开领域的信息。不要输入私人或保密信息。您输入到面向公众的AI聊天机器人中的任何信息都应被视为向全世界公开。

You should disable the chat history in AI chatbots if this option is available. This option is currently available in ChatGPT and Google Bard but not yet in Bing Chat.

如果有可能,您应该在AI聊天机器人中禁用聊天记录。目前ChatGPT和Google Bard提供了这个选项,但Bing Chat尚未提供。

Be aware that some AI platforms, particularly if used as an App on a smartphone, may request various permissions which give them access to information on your device. In those circumstances you should refuse all such permissions.

请注意,某些AI平台,特别是作为智能手机上的应用程序使用时,可能会请求各种权限,从而访问您设备上的信息。在这种情况下,您应拒绝所有此类权限。

In the event of unintentional disclosure of confidential or private information, you should contact your leadership judge and the Judicial Office. If the disclosed information includes personal data, the disclosure should be reported as a data incident.

如果不小心披露了保密或私人信息,您应联系您的上级法官和司法办公室。如果泄漏的信息包括个人数据,应将其报告为数据事件。

In future, AI tools designed for use in the courts and tribunals may become available but, until that happens, you should treat all AI tools as being capable of making public anything entered into them.

未来,专为法院使用而设计的AI工具可能会变得可用,但在那之前,您应将所有AI工具视为能够公开您输入其中的任何内容。

3) Ensure accountability and accuracy

3)确保责任和准确性

The accuracy of any information you have been provided by an AI tool must be checked before it is used or relied upon.

在使用或依赖AI工具提供的任何信息之前,必须检查其准确性。

Information provided by AI tools may be inaccurate, incomplete, misleading or out of date. Even if it purports to represent English law, it may not do so.

AI工具提供的信息可能是不准确、不完整、误导或过时的。即使它声称适用英国法律,也可能不是这样。

AI tools may:

AI工具可能:

·make up fictitious cases, citations or quotes, or refer to legislation, articles or legal texts that do not exist

·编造虚构的案例、引用或引文,或引用不存在的法律、文章或法律文本

·provide incorrect or misleading information regarding the law or how it might apply, and

·提供有关法律或法律适用的错误或误导性的信息

·make factual errors

·产生事实性错误

4) Be aware of bias

4)注意偏见

AI tools based on LLMs generate responses based on the dataset they are trained upon. Information generated by AI will inevitably reflect errors and biases in its training data.

基于LLM的AI工具根据其训练的数据集生成响应。AI生成的信息将不可避免地反映其训练数据中的错误和偏见。

You should always have regard to this possibility and the need to correct this.

您应始终注意这种可能性和纠正这种情况的需要。

5) Maintain security

5)保持安全

Follow best practices for maintaining your own and the court/tribunals’ security.

遵循维护自身和法院安全的最佳实践。

Use work devices (rather than personal devices) to access AI tools.

使用工作设备(而非个人设备)访问AI工具。

Use your work email address.

使用您的工作电子邮件地址。

If you have a paid subscription to an AI platform, use it. (Paid subscriptions have been identified as generally more secure than non‑paid). However, beware that there are a number of 3rd party companies that licence AI platforms from others and are not as reliable in how they may use your information. These are best avoided.

如果您订阅了AI平台的付费服务,请使用它(付费订阅通常被认为比非付费订阅更安全)。然而,请注意,有许多第三方公司从其他公司获得AI平台的授权,并且在使用您的信息方面不那么可靠。最好避免使用这些服务。

If there has been a potential security breach, see (2) above.

如果出现潜在的安全漏洞,请参见上文的(2)。

6) Be aware that court/tribunal users may have used AI tools

6)注意法院参与方可能已使用AI工具

Some kinds of AI tools have been used by legal professionals for a significant time without difficulty. For example, technology assisted review (TAR) is now part of the landscape of approaches to electronic disclosure. Leaving aside the law in particular, many aspects of AI are already in general use, for example in search engines to auto-fill questions, in social media to select content to be delivered, and in image recognition and predictive text.

一些AI工具已被法律专业人士长时间无障碍使用。例如,技术辅助审查(TAR)现已成为电子发现方法的一部分。撇开法律不谈,许多AI方面已在一般用途中得到普遍使用,例如在搜索引擎中自动填充问题,在社交媒体中选择要传递的内容,在图像识别和预测文本中使用。

All legal representatives are responsible for the material they put before the court/tribunal and have a professional obligation to ensure it is accurate and appropriate. Provided AI is used responsibly, there is no reason why a legal representative ought to refer to its use, but this is dependent upon context.

所有法律代理人对于他们提交给法院的材料负有责任,并有职业义务确保其准确性和适当性。只要负责任地使用AI,法律代理人没有理由必须提及其使用,但这取决于具体情况。

Until the legal profession becomes familiar with these new technologies, however, it may be necessary at times to remind individual lawyers of their obligations and confirm that they have independently verified the accuracy of any research or case citations that have been generated with the assistance of an AI chatbot.

然而,在法律界熟悉这些新技术之前,有时可能需要提醒个别律师他们的义务,并确认他们已经独立验证了在AI聊天机器人协助下生成的任何研究或案例引用的准确性。

AI chatbots are now being used by unrepresented litigants. They may be the only source of advice or assistance some litigants receive. Litigants rarely have the skills independently to verify legal information provided by AI chatbots and may not be aware that they are prone to error. If it appears an AI chatbot may have been used to prepare submissions or other documents, it is appropriate to inquire about this, and ask what checks for accuracy have been undertaken (if any). Examples of indications that text has been produced this way are shown below.

AI聊天机器人现在被未聘请律师的当事人使用。对于某些诉讼参与人来说,这可能是他们得到的唯一建议或帮助。诉讼参与人很少有独立验证AI聊天机器人提供的法律信息的技能,并可能不知道它们容易出错。如果看起来AI聊天机器人可能已用于准备提交材料或其他文件,应询问此事并确定人是否进行了准确性检查,如果有的话,还需额外确认准确性测试是否是恰当的。以下是表明文本可能以这种方式生成的迹象。

AI tools are now being used to produce fake material, including text, images and video. Courts and tribunals have always had to handle forgeries, and allegations of forgery, involving varying levels of sophistication. Judges should be aware of this new possibility and potential challenges posed by deepfake technology.

AI工具现在被用来制造包括文本、图像和视频在内的虚假材料。法院一直以来都需要处理不同复杂程度的伪造和伪造指控。法官应意识到这一新可能性及深度伪造技术所带来的潜在挑战。

Examples: Potential uses and risks of Generative AI in Courts and Tribunals

示例:法院使用生成式AI的潜在用途和风险

Potentially useful tasks

潜在有使用价值的任务

·AI tools are capable of summarising large bodies of text. As with any summary, care needs to be taken to ensure the summary is accurate.

·AI工具能够总结大量文本。与任何总结一样,需要注意确保总结的准确性。

·AI tools can be used in writing presentations, e.g. to provide suggestions for topics to cover.

·AI工具可用于撰写演讲,例如为需要涵盖的主题提供建议。

·Administrative tasks like composing emails and memoranda can be performed by AI.

·AI可执行撰写电子邮件和备忘录等行政任务。

Tasks not recommended

不被推荐用于完成的任务

·Legal research: AI tools are a poor way of conducting research to find new information you cannot verify independently. They may be useful as a way to be reminded of material you would recognise as correct.

·法律研究:AI工具是寻找无法独立验证的新信息的糟糕方式。它们可能可以用作提醒您认为正确的材料的方式。

·Legal analysis: the current public AI chatbots do not produce convincing analysis or reasoning.

·法律分析:当前面向公众的AI聊天机器人不会产生令人信服的分析或推理。

Indications that work may have been produced by AI:

可能表明工作是由AI生成的迹象:

·references to cases that do not sound familiar, or have unfamiliar citations (sometimes from the US)

·引用不常见的案例(有时来自美国)

·parties citing different bodies of case law in relation to the same legal issues

·当事方就同一法律问题引用不同的案例法;

·submissions that do not accord with your general understanding of the law in the area

·提交的内容与您对该领域法律的一般理解不符;

·submissions that use American spelling or refer to overseas cases, and

·提交的内容使用美国拼写或提及海外案例;

·content that (superficially at least) appears to be highly persuasive and well written, but on closer inspection contains obvious substantive errors.

·内容(至少表面上)看起来极具说服力和写作精良,但仔细检查后包含明显的实质性错误。
